Migrating 30 services to Azure App Configuration

App Configuration is a simple but powerful service from Azure. It lets you store key-value settings and manage feature flags. While feature flags can include logic to compute their value dynamically, configuration in App Configuration is just what it sounds like: a key-value store for your application settings.

Even though the service is easy to use, the benefits are huge. First, it gives you a centralized source of truth for your configuration. Second, and perhaps more importantly, it allows you to update settings on the fly - no redeployments required.

Local configuration files, such as appsettings.json and functionsettings.json, are easy enough to maintain when you have only a few services. But what happens when your ecosystem grows to 10, 20, or even 30 services?

Now imagine this: you need to change a single configuration key that is used across all your services. In the best-case scenario, you go through CI/CD pipelines one by one and manually update the value. In the worst case? You have to open every repository, commit new changes, and merge them to the main branch just to push out a tiny config update. That’s not just tedious, it’s risky. Each manual touch-point introduces the potential for inconsistency and human error.

Sound familiar?

In this post, I’ll walk you through how we migrated 30+ services from local config files to Azure App Configuration. I’ll share what we learned, what surprised us, and what I’d do differently if I had to do it again.

App Configuration setup

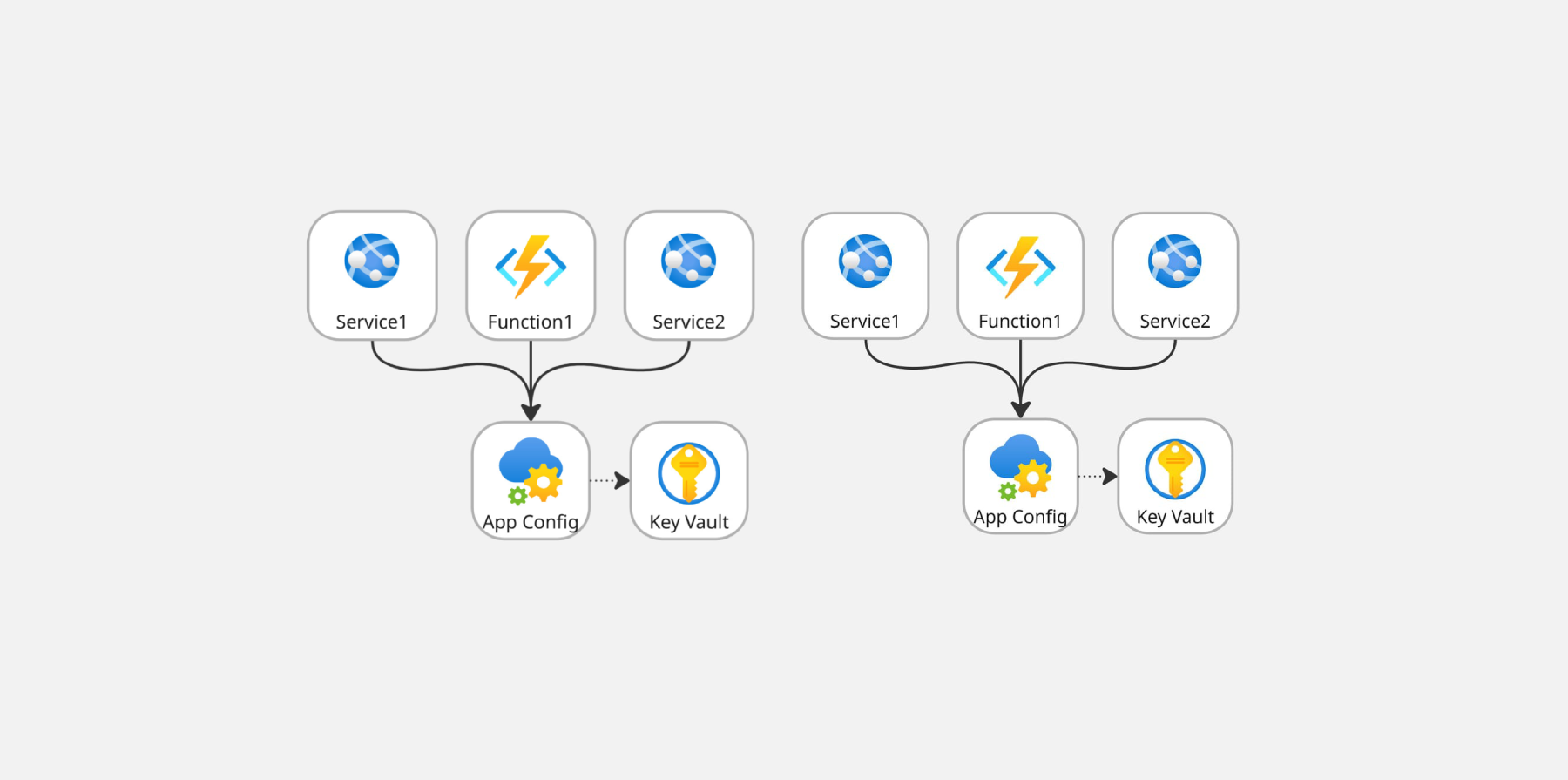

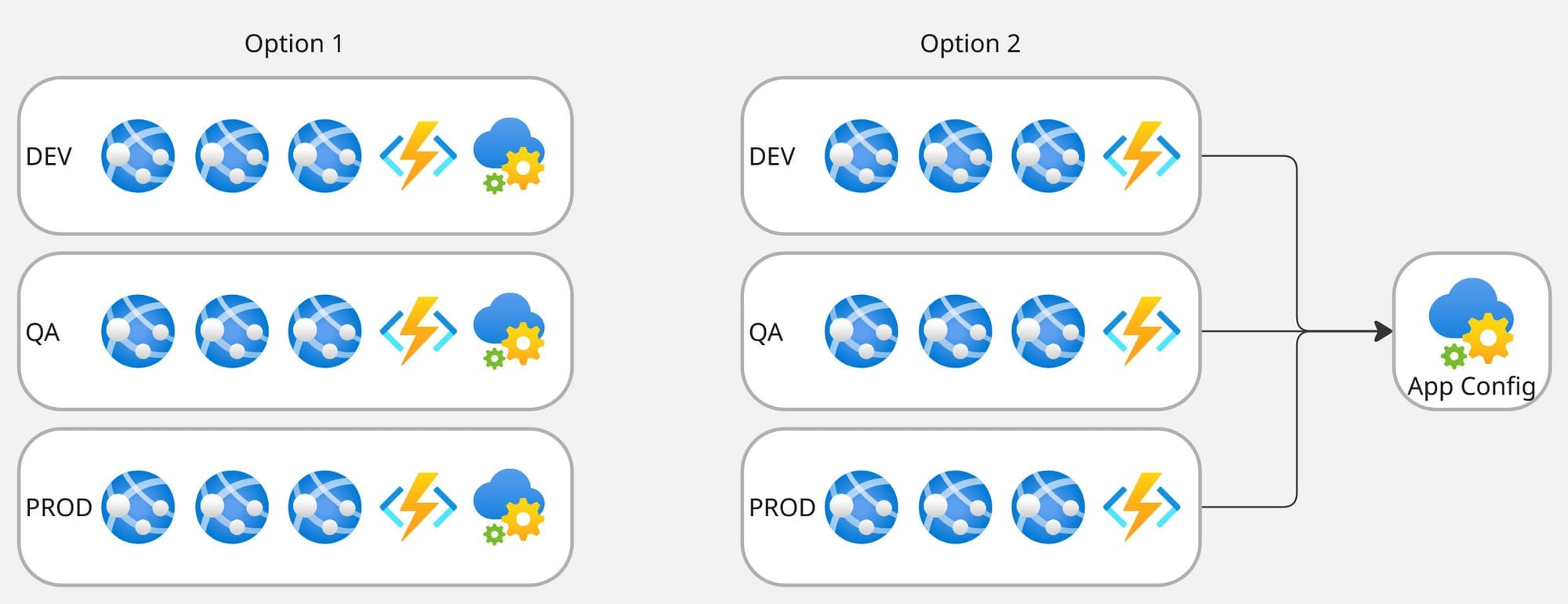

Our ecosystem has several stages for services: development, QA, UAT, and production. When using Azure App Configuration, you typically have two options for organizing settings across environments:

- Use a separate App Configuration instance for each environment and rely on labels to separate values by service.

- Use a single instance and rely on labels to separate values by stage, and on key prefixes to separate values by service.

Option 1: A Separate App Configuration per Environment

✅ Fine-grained access control. You can set different permissions for each environment, which is useful for protecting production settings.

✅ Better use of labels. Since labels aren’t used for environments, you can use them to group settings by service. This makes migrations from local configs easier - no need to change key names or add prefixes in your code.

⚠️ Higher cost for small systems. Each App Configuration instance has a base cost. If your infrastructure is small, this can be less cost-effective.

⚠️ Need to manage promotions. This isn't always a downside, but it does mean you need to think about how to promote configuration changes from lower to higher environments.

Option 2: A Single App Configuration Instance with Labels

✅ Cost savings. This depends on how often your services call App Configuration. The pricing model combines a base cost with a usage-based charge. On the Standard tier, the first 200,000 requests per day are included in the base cost, so a single instance can be cost-effective in smaller systems.

✅ Centralized source of truth. All your configuration lives in one place. You can also create shared keys used across multiple environments.

⚠️ Limited access control. If you store sensitive values (ideally using Key Vault references), you can’t restrict access by label. Access is granted to the entire App Configuration instance, so all keys are accessible.

⚠️ Label limitations. You must use labels to separate values by environment. That means you can’t also use labels to group settings by service. As a result, each service must use a unique key prefix to avoid conflicts, which usually means changing code.
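To make the prefix requirement concrete, here is a minimal sketch of how a service would pick its settings out of a single shared instance. It uses an in-memory list instead of the real service, and the `Setting` type, the `settings_for` helper, and the `orders:` and `billing:` prefixes are all hypothetical names chosen for illustration.

```python
from typing import NamedTuple


class Setting(NamedTuple):
    key: str
    label: str  # In this layout the label carries the environment name.
    value: str


def settings_for(store, service_prefix, environment):
    """Return one service's settings for one environment, with the prefix trimmed."""
    selected = {}
    for setting in store:
        if setting.label == environment and setting.key.startswith(service_prefix):
            # Trimming the prefix lets the service keep its original key names.
            selected[setting.key[len(service_prefix):]] = setting.value
    return selected


# Hypothetical contents of a single shared instance.
store = [
    Setting("orders:Timeout", "dev", "30"),
    Setting("orders:Timeout", "prod", "10"),
    Setting("billing:Timeout", "prod", "20"),
]

orders_prod = settings_for(store, "orders:", "prod")
print(orders_prod)
```

Without the prefix, the two `Timeout` keys labeled `prod` would collide, which is why this layout usually forces a change in how each service reads its configuration.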

What We Chose and Why

We decided to go with separate App Configuration instances for each environment. The main reason: we didn’t want to modify existing code to add prefixes when reading configuration. This approach also gives us better control over access to sensitive values, depending on the environment.

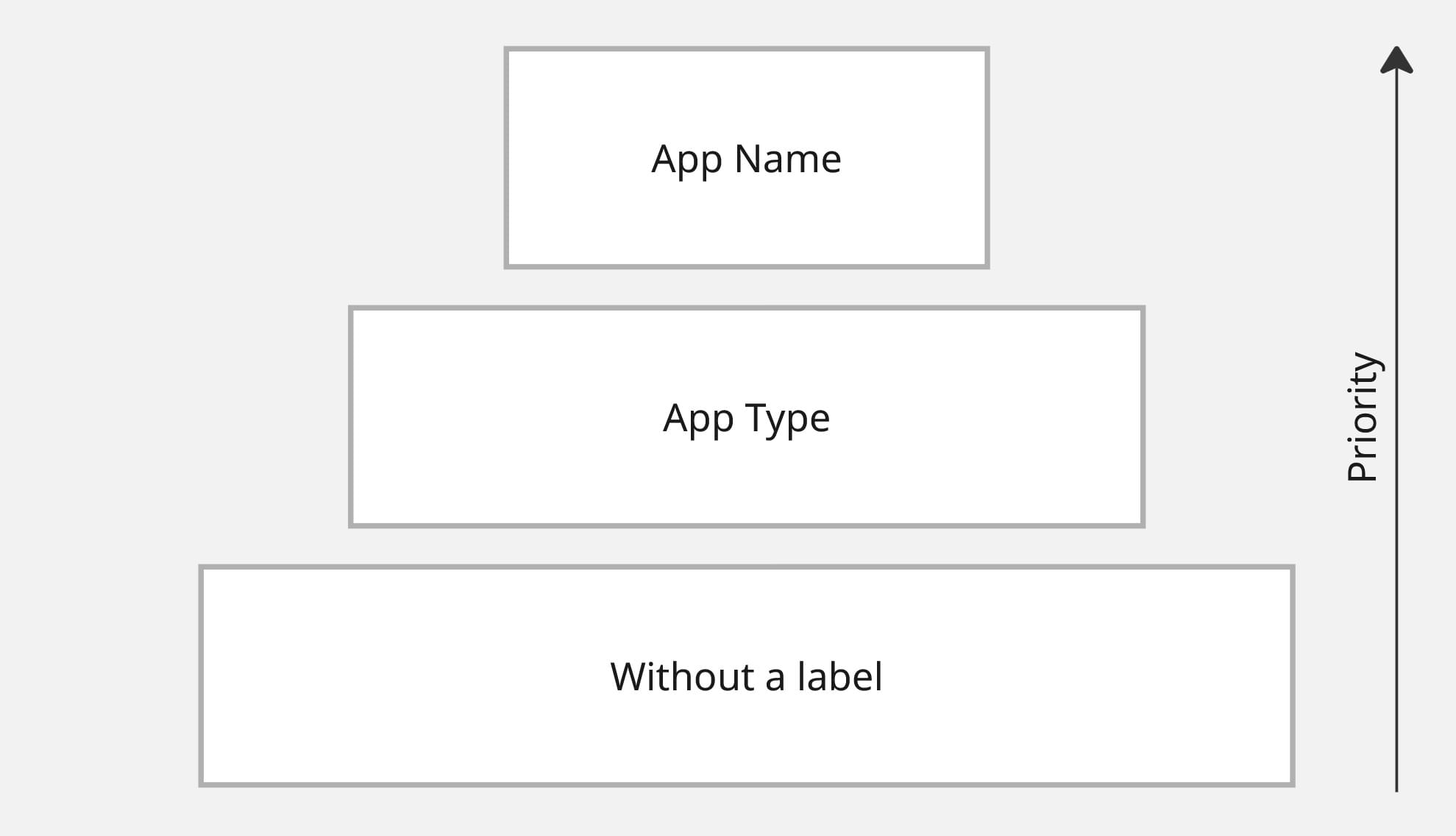

As for labels, we introduced a three-level fallback strategy. Each application loads settings in the following order, with later labels overriding earlier ones if the same key exists:

- No label – shared configuration for all apps.

- App type – for example: services, functions, etc.

- App name – settings specific to one service.

This structure allows us to define shared configuration across services and even across systems, without duplication.
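The override order can be sketched in a few lines. This is an illustration of the fallback logic only, using an in-memory dictionary rather than the App Configuration SDK. The `resolve_settings` helper, the key names, and the `orders-api` and `report-fn` app names are assumptions made up for the example.

```python
def resolve_settings(store, app_type, app_name):
    """Merge settings label by label; later labels override earlier ones."""
    # None stands for "no label", i.e. configuration shared by all apps.
    label_order = [None, app_type, app_name]
    resolved = {}
    for label in label_order:
        for (key, setting_label), value in store.items():
            if setting_label == label:
                resolved[key] = value
    return resolved


# Hypothetical store contents, keyed by (key, label).
store = {
    ("Logging:Level", None): "Warning",
    ("Http:TimeoutSeconds", None): "30",
    ("Http:TimeoutSeconds", "services"): "10",
    ("Logging:Level", "orders-api"): "Debug",
}

resolved = resolve_settings(store, app_type="services", app_name="orders-api")
print(resolved)
```

Here `orders-api` inherits the shorter timeout from its app type and overrides the log level on its own, while an app with no specific entries simply receives the shared values.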

Populating App Configuration

This was the most tedious part of the migration. We had to go through each service, review its configuration files, and manually recreate the same keys and values in Azure App Configuration. While doing this, we also looked for opportunities to create shared keys that could be reused across services.

Once we finished copying the keys, we cleared the local config files and left only the connection string pointing to App Configuration.

Looking back, I realized something important: we should have built a tool to automate this process. Why? Because we ended up with around 400 keys, and it’s nearly impossible to migrate that much config by hand without making mistakes. I’m still pretty sure we missed something, even with triple-checking.
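A first building block for such a tool could be a function that flattens a nested JSON config file into flat key-value pairs. The sketch below is not something we built. It assumes the .NET convention of joining nested sections with `:` and indexing array items by position, and the `flatten` helper and the sample file contents are hypothetical.

```python
import json


def flatten(node, prefix=""):
    """Flatten nested JSON into 'Section:Key' pairs with string values."""
    if isinstance(node, dict):
        items = node.items()
    elif isinstance(node, list):
        # Array items are addressed by index, e.g. "Hosts:0", "Hosts:1".
        items = ((str(index), item) for index, item in enumerate(node))
    else:
        # Leaf value: store it as a string; JSON null becomes an empty string.
        return {prefix: "" if node is None else str(node)}
    flat = {}
    for name, child in items:
        child_key = f"{prefix}:{name}" if prefix else name
        flat.update(flatten(child, child_key))
    return flat


# Hypothetical appsettings.json contents.
appsettings = json.loads("""
{
  "Logging": {"LogLevel": {"Default": "Information"}},
  "Http": {"TimeoutSeconds": 30, "AllowedHosts": ["a.example", "b.example"]}
}
""")

flat = flatten(appsettings)
for key, value in flat.items():
    print(f"{key} = {value}")
```

The flat output could then be reviewed, deduplicated into shared keys, and imported, instead of being retyped by hand.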

Promoting settings to higher environments

Once everything was populated in our first App Configuration instance, it was time to copy the data to other environments.

Thankfully, this part was easier. Azure provides an Import feature that lets you copy keys from one App Configuration instance to another.

There are a few helpful options when importing: you can filter by labels, for example. One limitation worth noting: you can only select up to 5 labels during import. I’m not entirely sure why this restriction exists, but it’s something to keep in mind.

Since we were working with newly created App Configuration instances, we simply imported all keys, then updated the environment-specific values where needed.
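Conceptually, that "import everything, then override" step looks like the sketch below. It works on plain dictionaries rather than the real Import feature, and the `promote` helper, key names, and values are hypothetical. Rejecting overrides for unknown keys is an extra safety check added for the example, not something the Import feature does.

```python
def promote(source, overrides):
    """Copy every key from the lower environment, then apply target-specific values."""
    unknown = set(overrides) - set(source)
    if unknown:
        # An override for a key that was never imported is probably a typo.
        raise KeyError(f"Overrides for keys missing from the source: {sorted(unknown)}")
    target = dict(source)  # Copy, so the source environment is left untouched.
    target.update(overrides)
    return target


# Hypothetical development settings and the values that differ in QA.
dev = {"Http:TimeoutSeconds": "30", "Storage:Account": "devstorage"}
qa = promote(dev, {"Storage:Account": "qastorage"})
print(qa)
```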

Minimizing risk during rollout

Before releasing any changes, we wanted to reduce risk as much as possible. With configuration, it’s easy to break everything if you accidentally release a bad value, and we wanted to avoid that.

Here’s what we did to make the rollout safer:

- Kept local production config as a fallback. We didn’t delete the existing production config files right away. If something was missing in App Configuration, the service could fall back to its local configuration file. This gave us a safety net.

- Built a comparison tool. I created a small tool that compares local configuration with values in App Configuration. It generates a report showing missing keys or mismatched values. This helped us verify everything before going live.

- Released services gradually. We didn’t want to block or interrupt other teams’ release schedules, so we had to plan a smooth, safe rollout process.
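The core of a comparison tool like the one mentioned above can be very small. The following is a simplified sketch rather than the actual tool: it assumes both sides have already been loaded into flat dictionaries of strings, and the `compare` helper and sample keys are hypothetical.

```python
def compare(local, remote):
    """Report keys missing from App Configuration and keys whose values differ."""
    missing = sorted(key for key in local if key not in remote)
    mismatched = {
        key: (local[key], remote[key])  # (local value, remote value)
        for key in sorted(local)
        if key in remote and local[key] != remote[key]
    }
    return {"missing": missing, "mismatched": mismatched}


# Hypothetical flattened local config and values read from App Configuration.
local = {"Http:TimeoutSeconds": "30", "Cache:Ttl": "60", "Queue:Name": "orders"}
remote = {"Http:TimeoutSeconds": "30", "Cache:Ttl": "600", "Shared:Region": "westeurope"}

report = compare(local, remote)
print("Missing in App Configuration:", report["missing"])
print("Mismatched values:", report["mismatched"])
```

Keys that exist only in App Configuration are deliberately ignored, since shared keys are expected there. Note that the comparison is case-sensitive, while .NET treats configuration keys as case-insensitive, so a real tool may want to normalize key casing first.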

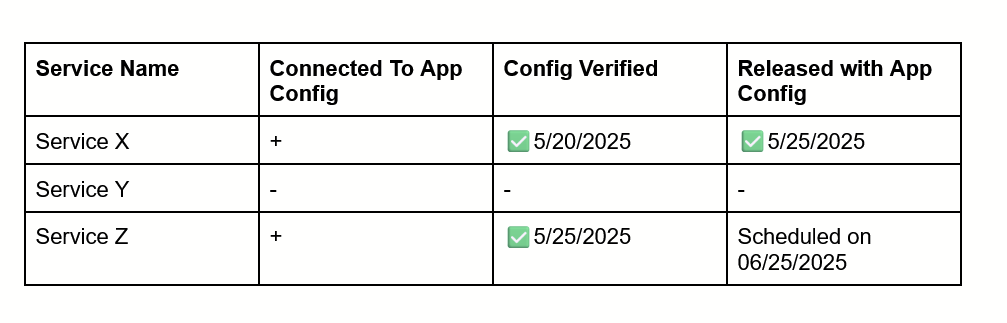

To coordinate all of this, we created a simple tracking table with four columns: Service Name, Connected to App Configuration, Config Verified, and Released with App Configuration.

Each service had a row, and we updated the table step-by-step:

- When a service was configured to read from App Configuration

- When its config passed the comparison tool checks

- When it was actually released to production using App Configuration

Every service had to pass the comparison tool check before its release.

This table paid off quickly. At one point, we urgently needed to update a configuration key across all services. Instead of guessing or checking manually, we used the table to see at a glance which services were already using App Configuration, and therefore how to update the key for each one.
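Our table lived in a shared document, but the same idea is easy to express as data. The sketch below is only an illustration: the `ServiceStatus` type, the `update_plan` helper, and the service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServiceStatus:
    name: str
    connected: bool = False  # Configured to read from App Configuration.
    verified: bool = False   # Passed the comparison tool checks.
    released: bool = False   # Running in production with App Configuration.


def update_plan(table):
    """Split services by where an urgent key change has to be made."""
    plan = {"app_configuration": [], "local_config": []}
    for row in table:
        target = "app_configuration" if row.released else "local_config"
        plan[target].append(row.name)
    return plan


# Hypothetical snapshot of the tracking table.
table = [
    ServiceStatus("orders-api", connected=True, verified=True, released=True),
    ServiceStatus("billing-api", connected=True, verified=True),
    ServiceStatus("report-fn"),
]

plan = update_plan(table)
print(plan)
```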

Outcome

Most of our services have already been migrated, and a few are queued for release. Let’s take a step back and look at why this migration mattered, and what we gained from it.

Centralized Configuration Management. With a single place to manage key settings, we eliminated the need to open dozens of repositories for small updates. This improved developer efficiency and reduced the risk of configuration drift between services.

Impact: changing a global setting now takes minutes, not hours.

Safer and faster releases. We decoupled configuration from deployment. Now we can adjust settings on the fly without triggering a full CI/CD pipeline or risking downtime. And because a configuration change no longer requires a deployment, there is no risk of shipping unfinished features along with it.

Impact: we reduced the number of emergency hotfixes due to bad configs, especially during off-hours. The time to resolve incidents caused by bad configuration dropped dramatically.

Enabled Feature Flag adoption. The migration laid the foundation for systematic use of feature flags. Flags decouple feature releases from deployments, making deployments faster and safer. That is a big topic for a separate post.

Impact: we started gating risky features behind flags, reducing the blast radius of releases.

Role-Based Operational Flexibility. App Configuration enabled us to delegate specific responsibilities to different roles.

Impact: operations gained autonomy. Token rotation, performance tuning, and incident response actions can now be executed in real time by the right people, without merging code or redeploying services.

Things to consider when using App Configuration

Before fully switching to Azure App Configuration, here are a few things you’ll want to think about:

- Do you really need dynamic configuration? You probably don’t want to query App Configuration every second. In many cases, it’s better to load configuration at startup and cache it. Consider how often your settings actually change.

- Cache expiration matters. Decide what cache expiration interval makes sense for your app settings and feature flags. Shorter intervals mean more up-to-date values, but also more requests.

- Understand request quotas. On the Standard tier, App Configuration allows 30,000 requests per hour. Keep that in mind when deciding how often your services should refresh their config.

- Plan for outages, even small ones. App Configuration has an SLA of 99.99%, but no one wants to be hit by the remaining 0.01%. The SDK uses cached values if the service is temporarily unavailable, but for better reliability, consider using App Configuration Replicas. They’re relatively cheap, around $1.20 per day per replica on the Standard tier, and can make a big difference.

- Cross-team coordination. If you have multiple teams working on the same product, think about how you’ll propagate config changes across teams. Who owns which keys? Who reviews changes?

- Promoting changes across environments. You’ll also need a policy for promoting config changes from development to higher environments like QA and production. Think about your promotion flow just like you do for code.
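To get a feel for how cache expiration and the request quota interact, a rough back-of-the-envelope calculation helps. The sketch below assumes the worst case, where every running instance sends a request on every refresh interval. In practice the numbers depend on how your client library refreshes, and the instance counts are hypothetical. Both helper functions are made up for this example.

```python
import math


def requests_per_hour(instances, refresh_interval_seconds, requests_per_refresh=1):
    """Worst-case hourly request volume if every instance polls on every interval."""
    refreshes_per_hour = 3600 / refresh_interval_seconds
    return instances * refreshes_per_hour * requests_per_refresh


def min_refresh_interval_seconds(instances, hourly_quota, requests_per_refresh=1):
    """Shortest whole-second interval that keeps the fleet within the hourly quota."""
    return math.ceil(3600 * instances * requests_per_refresh / hourly_quota)


# Hypothetical fleet: 30 services with 3 instances each.
instances = 30 * 3
hourly_quota = 30_000  # Standard tier quota mentioned above.

load = requests_per_hour(instances, refresh_interval_seconds=30)
floor = min_refresh_interval_seconds(instances, hourly_quota)
print(f"{load:.0f} requests/hour at a 30-second interval")
print(f"Shortest safe interval: {floor} seconds")
```

With these assumed numbers, a 30-second interval uses roughly a third of the hourly quota, which leaves room for restarts and scale-out. Adding environments or instances to the same store shifts the math quickly.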

Final thoughts

Migrating to Azure App Configuration gave us a more scalable, flexible, and maintainable setup, but it also required careful planning and coordination. If you’re just getting started, I hope this post helps you avoid some of the common pitfalls and gives you a solid foundation to build on.

How do you manage configuration in your projects? Have you tried App Configuration, or are you using a different approach? I’d love to hear what’s working for you.