LLMs are going to replace software engineers. Are they?

This post is not about healthcare, but it's about a closely related topic. For the last two months, my feed has been full of posts claiming that AI is going to replace software engineers. And I won't lie, I use AI in my daily work a lot, and it really helps. But can it replace engineers?

My inner voice said, "Of course not." But was it a voice of wisdom or pride? Maybe it's time to quit the industry, buy a farm with llamas (fluffy ones, not another model), and let AI build our future. So, I decided to run a little experiment.

Let's build something

I know LLMs struggle with large contexts, so throwing one at a complex codebase didn't sound fair. I needed something small and illustrative. What could be more illustrative than a video game? A limited number of entities and a few rules sound like a perfect playground for AI, right?

I set one rule: 80% of code should come from AI. The remaining 20% was my safety net, because otherwise, you end up in a dead end when the model refuses to pivot. Honestly, it reminded me how both AI and humans fall for the sunk cost fallacy.

The setup

My partner in crime: ChatGPT. I used two chats:

1) o4-mini, my prompt strategist. It helped me think through the game design and approach, but wrote no code.

2) o4-mini-high, the “Employee of the Year”. It wrote almost every line of code used in the game.

The game idea

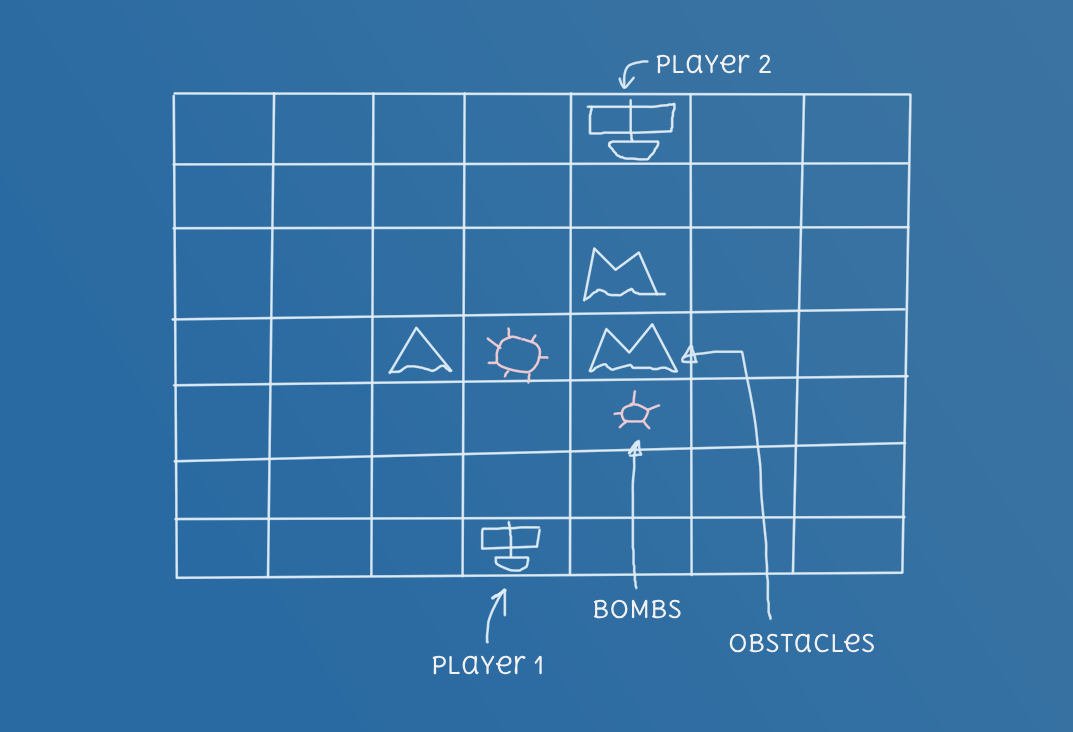

Let's look at the game we were going to implement. It was meant to be a turn-based game for two players on an 8x8 grid. Each player controls a 1-cell ship. They start on opposite sides of the map and can move vertically or horizontally. How far a ship can move is determined by a dice roll at the start of the turn.

Every second turn, a player can place a bomb anywhere on the grid except on a ship. Bombs are visible only to the player who planted them. Each player starts with 3 HP (health points) and loses 1 HP when stepping on a bomb.

Simple rules and clear mechanics: it should be perfect for an LLM.

Development phase 1: Single-player

Here’s the exact prompt I used to kick things off:

You are an experienced unity developer. You prefer extendable, clean code. Let's make a game. Implement a Unity game for a 2-player, turn-based naval duel on a 8×8 grid. Each ship has 3 HP, slides up/down/left/right by a D6 roll until it hits an obstacle or its move limit, starts with 2 bombs (+1 every 2 turns). Include classes for Board, Player, Ship, Grid, Bomb, and methods for movement, bomb placement/detonation, turn management, and win conditions.

If you’ve never used Unity, quick heads-up: to have an object appear on screen, your class needs to inherit from MonoBehaviour. That’s the base class Unity uses to manage components on a scene.

Usually, especially in prototypes, this turns into a mess. Your objects end up doing everything: game logic, player input, rendering, and the Single Responsibility Principle quietly dies in the corner. At least my prototypes have experienced it every time.

That context matters for understanding the model's output. It gave me all the classes I requested, but only one of them inherited from MonoBehaviour: Board.cs. That class became the orchestrator that handles the game loop: dice rolls, turns, and even ship movement.

At this point, I legitimately started browsing farm listings. That code was better than the code I'd write for a prototype, and it was generated in 25 seconds.

I was so excited that I thought another 60 seconds would get me my dream game, ready to play. So I asked the model to add a representation layer for each entity:

- Grid

- Ships

- Bombs

This is where things went downhill. It gave me code, but the dependencies were awful. I had to correct almost every file, and I kept having to ask the model things like:

... but should the grid renderer be responsible for displaying ships?

...stop putting everything inside Board.cs

At some point, I realized that the bigger the context, the harder it is for the model to generate good code. It generated methods that were never used, rewrote code that shouldn't have changed, and introduced wrong dependencies.

Eventually, I stopped asking “what should we do next?” and started giving surgical instructions:

Let's extend GridRenderer with a method that accepts a start position and direction, and it will handle path highlighting...

It took me two evenings to make a prototype that was playable and fulfilled all requirements. Without AI, it could have taken up to two weeks. Impressive, huh?

Do we still need engineers? I don’t know who else would have validated that code, caught the edge cases, or fixed the structural problems. Someone has to review the output. And that someone is… still an engineer.

Yes, it spits out code like a wizard. But sometimes, it spits out this:

foreach (var b in board.GetType().GetField("bombs", System.Reflection.BindingFlags.NonPublic | System.Reflection.BindingFlags.Instance).GetValue(board) as List<Bomb>)

{

if (b.Owner == board.Players[board.CurrentPlayerIndex] && b.Position == pos) hazard = true;

}

You might say that code quality doesn't matter for a prototype, and I agree. But let's move on to the final part; it may change your mind.
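A side note on the reflection snippet above, for readers wondering what the alternative looks like. This is a minimal sketch, not the game's actual code: it assumes Board can simply expose a query method, and the names HasOwnBombAt and PlaceBomb, the tuple-based position, and the trimmed-down Player and Bomb classes are all hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical, trimmed-down types; the real game classes are not shown in this post.
public sealed class Player
{
    public string Name { get; }
    public Player(string name) { Name = name; }
}

public sealed class Bomb
{
    public Player Owner { get; }
    public (int X, int Y) Position { get; }

    public Bomb(Player owner, (int X, int Y) position)
    {
        Owner = owner;
        Position = position;
    }
}

public sealed class Board
{
    // The list stays private; callers ask a question instead of reaching in via reflection.
    private readonly List<Bomb> bombs = new List<Bomb>();

    public void PlaceBomb(Player owner, (int X, int Y) position)
    {
        bombs.Add(new Bomb(owner, position));
    }

    // True when `player` has planted a bomb on `position`.
    public bool HasOwnBombAt(Player player, (int X, int Y) position)
    {
        return bombs.Any(b => ReferenceEquals(b.Owner, player) && b.Position == position);
    }
}
```

With something like this, the whole loop collapses into one call, for example `hazard = board.HasOwnBombAt(currentPlayer, pos);`, and the private field stays private.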

Development phase 2: Multiplayer

Since I finished the prototype five days ahead of schedule, I couldn’t resist the idea of building a multiplayer version. I decided to build a server using the tech I know best - ASP.NET.

I was still following the 80/20 rule. The hardest thing for the model was creating an abstraction for the opponent that hides whether a bot or another human is playing. Every time I instructed it to build a proper abstraction, it made the codebase... worse. So I stepped in and rewrote what I had to.
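To make the idea concrete, here is a rough sketch of what such an opponent abstraction could look like. It is not the code from the game: IOpponent, BotOpponent, RemoteOpponent, MoveAction, and the Direction enum are hypothetical names, and the bot simply picks a random direction.

```csharp
using System;
using System.Threading.Tasks;

// All names here are hypothetical; they illustrate the shape, not the game's real code.
public enum Direction { Up, Right, Down, Left }

public sealed class MoveAction
{
    public Direction Direction { get; }
    public int Distance { get; }

    public MoveAction(Direction direction, int distance)
    {
        Direction = direction;
        Distance = distance;
    }
}

// The game loop depends only on this interface and never asks who is behind it.
public interface IOpponent
{
    // diceRoll is the move limit for this turn.
    Task<MoveAction> ChooseMoveAsync(int diceRoll);
}

// A bot decides locally and answers immediately.
public sealed class BotOpponent : IOpponent
{
    private readonly Random random;

    public BotOpponent(Random random) { this.random = random; }

    public Task<MoveAction> ChooseMoveAsync(int diceRoll)
    {
        var direction = (Direction)random.Next(4); // 0..3 maps onto the four enum values
        return Task.FromResult(new MoveAction(direction, diceRoll));
    }
}

// A remote player answers later, when a message arrives from the server.
public sealed class RemoteOpponent : IOpponent
{
    private TaskCompletionSource<MoveAction> pending = new TaskCompletionSource<MoveAction>();

    public Task<MoveAction> ChooseMoveAsync(int diceRoll)
    {
        pending = new TaskCompletionSource<MoveAction>();
        return pending.Task;
    }

    // Called by the (hypothetical) network layer when the opponent's move is received.
    public void OnMoveReceived(MoveAction move)
    {
        pending.TrySetResult(move);
    }
}
```

The turn manager would just `await opponent.ChooseMoveAsync(roll)` in both cases; swapping a bot for a human then means swapping one object, not touching the game loop.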

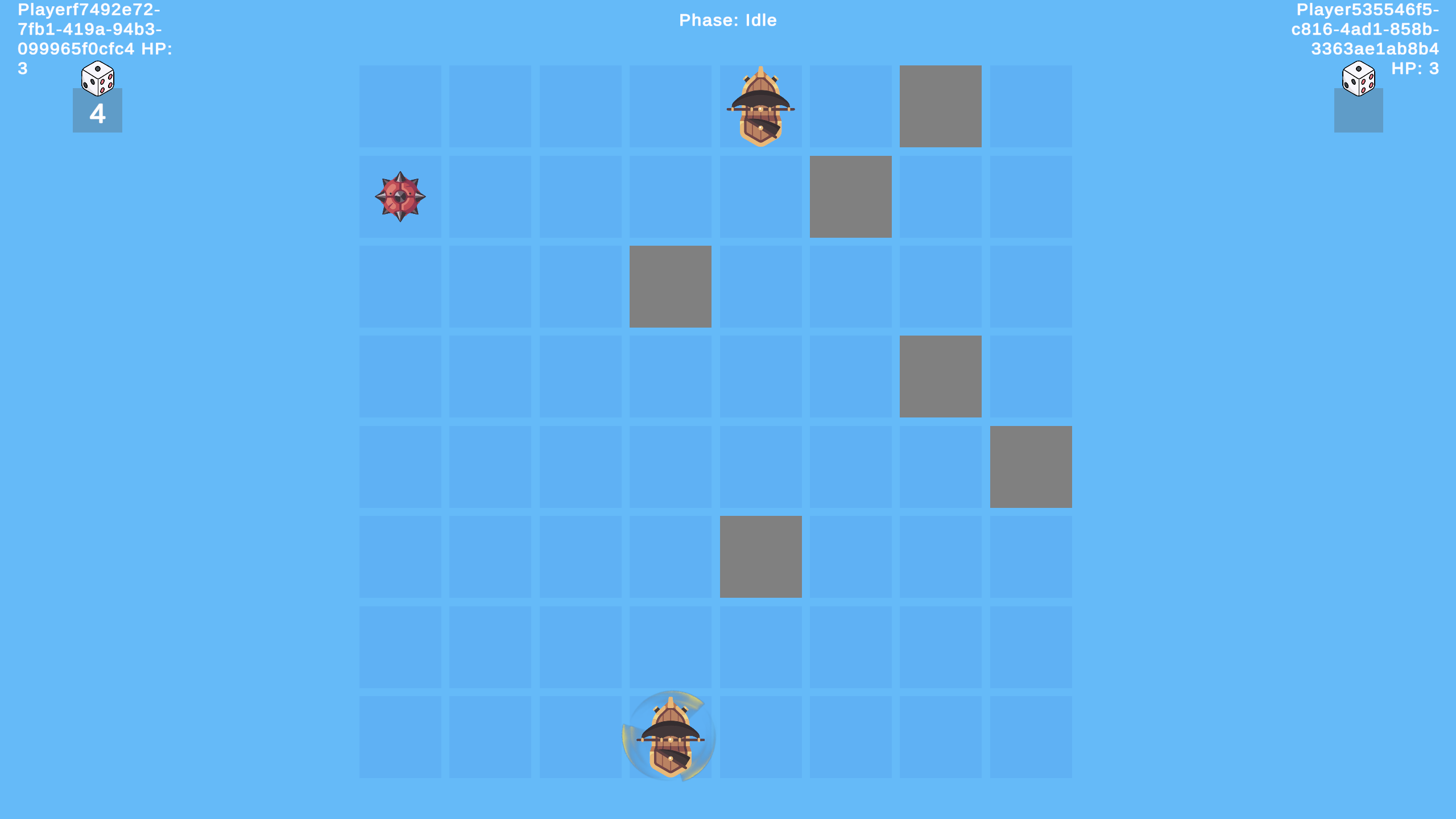

I won't bore you with every detail of how we implemented multiplayer, but after a few evenings I had a working multiplayer prototype. It felt amazing.

In a few more weeks, this could be an MVP. Not bad for a side experiment.

But one small moment is worth mentioning. Look at this payload from the server:

[

{"$type":"bomb","payload":{"x":0,"y":6},"id":2,"action":1},

{"$type":"move","payload":{"distance":4,"direction":3},"id":3,"action":0}

]

Looks normal, right? If you’ve followed along, you already see the problem. But in case you haven’t: this payload includes the exact position of a bomb. That bomb was placed by one player and was supposed to be hidden from the opponent. The entire gameplay mechanic hinges on that secrecy.

Had I explicitly told the model not to expose this data? No, I hadn't. I just assumed it would understand, because to me, as a human, it was obvious.
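One way to close a leak like this is to filter events on the server per recipient, so bomb coordinates never reach the other player at all. The sketch below only illustrates that idea under my own assumptions: the event classes, the PlayerId field, and EventVisibility.ForRecipient are hypothetical and are not the server's real types.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical event model; the server's real types are not shown in this post.
public abstract class GameEvent
{
    public int Id { get; }
    public int PlayerId { get; } // who performed the action

    protected GameEvent(int id, int playerId)
    {
        Id = id;
        PlayerId = playerId;
    }
}

public sealed class MoveEvent : GameEvent
{
    public int Direction { get; }
    public int Distance { get; }

    public MoveEvent(int id, int playerId, int direction, int distance) : base(id, playerId)
    {
        Direction = direction;
        Distance = distance;
    }
}

public sealed class BombPlacedEvent : GameEvent
{
    public int X { get; }
    public int Y { get; }

    public BombPlacedEvent(int id, int playerId, int x, int y) : base(id, playerId)
    {
        X = x;
        Y = y;
    }
}

// Sent to the opponent instead of BombPlacedEvent: it carries no coordinates.
public sealed class BombHiddenEvent : GameEvent
{
    public BombHiddenEvent(int id, int playerId) : base(id, playerId) { }
}

public static class EventVisibility
{
    // Returns the events as `recipientId` is allowed to see them.
    public static List<GameEvent> ForRecipient(IEnumerable<GameEvent> events, int recipientId)
    {
        return events
            .Select(e => e is BombPlacedEvent bomb && bomb.PlayerId != recipientId
                ? new BombHiddenEvent(bomb.Id, bomb.PlayerId)
                : e)
            .ToList();
    }
}
```

The point of the design is that the redaction happens before serialization: hiding the bomb in the client UI would not help, because the coordinates would still be in the response for anyone who opens the network tab.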

What if this weren't a game? What if it were your healthcare app? And the model decided, helpfully, to expose internal data that you thought was private? Still sure we don't need engineers?

Takeaways

So, will AI replace software developers? Not yet. Will LLMs help us build faster? That's obvious. I built a working game prototype and made it multiplayer in a few evenings. That's wild.

Will they change how we build? They already are. But we still need people who understand architecture, data privacy, abstractions, and why it's a bad idea to send bomb coordinates to your opponent.